Introducing UpScan: UpSight’s Digital Zoo (Part I)

In December we held another UpSight Demo Day, where we showed our Predict, Interdict and Evict strategy working against live ransomware. Ransomware is nearly always in the news these days, so it's not surprising that we wanted to show that our strategy can do more than keep your web tokens safe: it can also stop ransomware attacks!

As part of that demo we wanted to explain why our strategy is adaptable to different kinds of threats, so we gave a brief behind-the-scenes tour of UpSight and talked about our ‘detonation lab’. I wanted to dig a bit deeper into how we are doing it and what we’ve learned from it so far. This is the first in a series about what we are calling the UpScan Service, the analytics behind it, and how we are going to make this tool available for you to use!

First, that word ‘detonation’. Generally, I like to steer clear of military language. It has its place, but this isn’t it. Like many things, though, we started using it and it has stuck for the moment. UpSight is based on attacker behaviors, regardless of how the attacker manifests those behaviors. That makes it fundamentally different from a traditional signature-based antivirus, which can only look at the static attributes of a single potentially malicious file. However, our ‘detonation lab’ is as close to ‘scanning’ a specific file as we can get, so we are going to call it the ‘UpScan Service’.

A healthy environment for malware

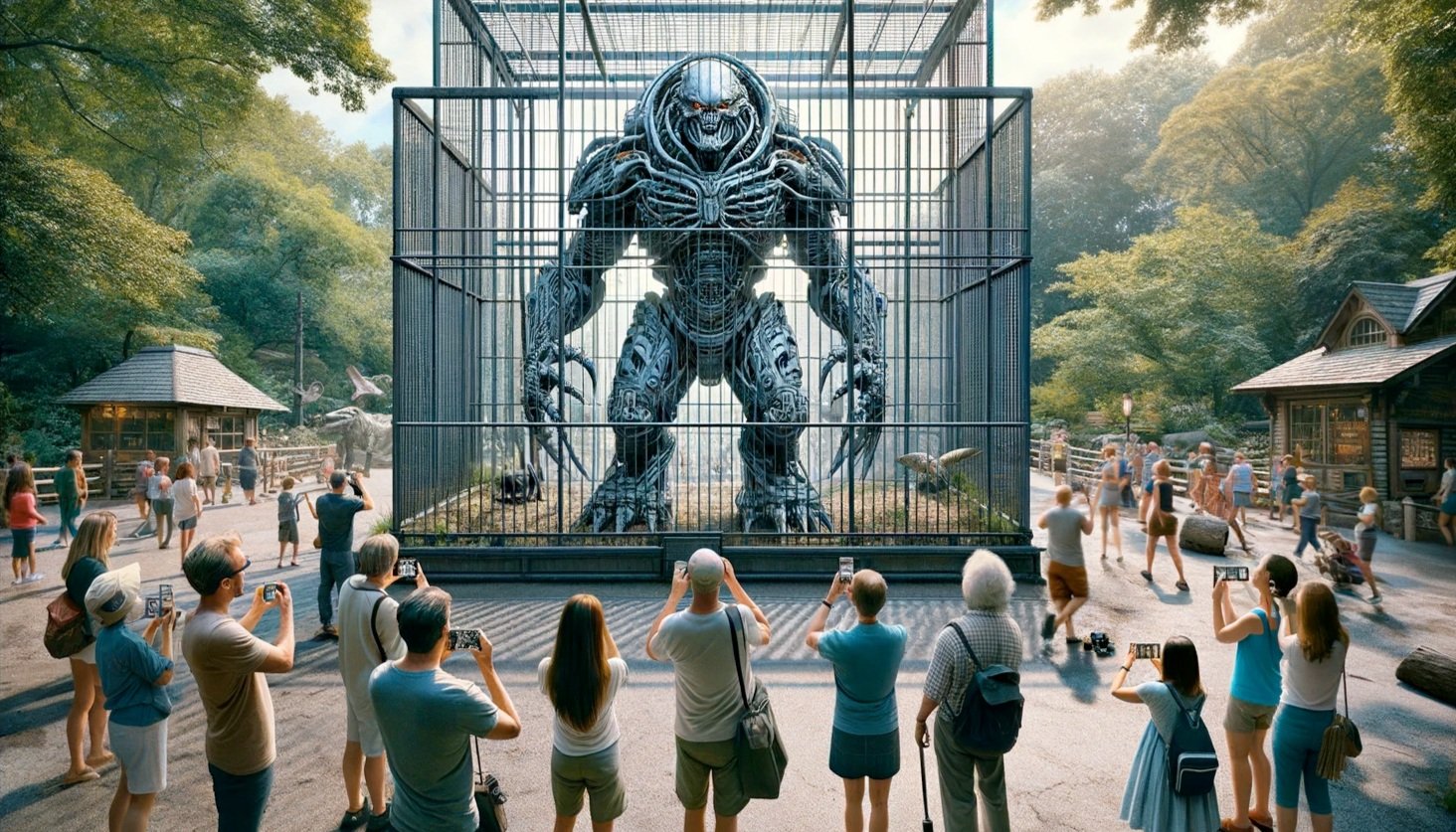

UpScan is a bit like a digital zoo for dangerous malware

While the ethics of keeping animals in zoos is complex, we look at UpScan a bit like trying to operate a zoo with dangerous animals. The purpose is to give us an opportunity to study and learn about their behaviors without being harmed in the process. A good zoo starts with providing a healthy environment.

One of the bigger problems with these sorts of projects is getting malware to not only run but do something ‘interesting’. Many malware samples will studiously avoid running if they think they are being watched. Frequently this is done with explicit checks for tools or hardware settings associated with the popular VMware and VirtualBox virtual machine environments. However, we believe that using Microsoft’s Hyper-V gets us around many of these restrictions. A ‘fully secure’ copy of Windows 11 that implements Secure Boot and Device Guard is always running within a Hyper-V virtual container in order to keep the core Windows security services safe from attacks like Mimikatz. Hyper-V is also used to enable some ‘developer’ scenarios like WSL2, which essentially runs a complete copy of Linux alongside Windows. After all, malware that refuses to do business on Windows 11 is not going to be very successful.

UpScan starts by providing a healthy environment with plenty of available processing and disk resources, contained within a Hyper-V virtual environment. We don’t make any attempt to mask Hyper-V but, for obvious reasons, we have not enabled many of the integration services either.

To keep our UpScan lab safe, we take advantage of the nested nature of Hyper-V and run the lab controller infrastructure within a Hyper-V container that itself creates the actual scanning Hyper-V containers. The controller is responsible for taking malware samples from a queue, creating a new instance of the UpScan virtual container (aka our healthy environment), and essentially reaching in and double-clicking on the malware to execute it. When we are done with the UpScan virtual container, the controller simply removes all of its resources, effectively throwing it away. UpScan containers are always created from a fresh instance of a static, read-only snapshot. Periodically the entire controller container is reset as well.
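The controller loop described above can be sketched roughly as follows. This is only an illustration, not the actual UpScan controller: `FakeHypervisor`, `run_queue` and the snapshot name `clean-baseline` are hypothetical stand-ins, and a real controller would talk to the Hyper-V management interfaces rather than this in-memory model.

```python
from collections import deque
from dataclasses import dataclass, field
from itertools import count


@dataclass
class FakeHypervisor:
    """In-memory stand-in for a hypervisor API (hypothetical, for illustration)."""
    running: dict = field(default_factory=dict)
    _ids: count = field(default_factory=count)

    def create_from_snapshot(self, snapshot: str) -> int:
        # Every container starts from the same static, read-only snapshot.
        vm_id = next(self._ids)
        self.running[vm_id] = {"snapshot": snapshot, "executed": []}
        return vm_id

    def execute(self, vm_id: int, sample: str) -> None:
        # Stands in for "reaching in and double-clicking" the sample.
        self.running[vm_id]["executed"].append(sample)

    def destroy(self, vm_id: int) -> None:
        # Throw the whole container away; nothing is reused.
        del self.running[vm_id]


def run_queue(hv, samples, snapshot="clean-baseline"):
    """Run each queued sample in its own fresh container, then discard it."""
    log = []
    queue = deque(samples)
    while queue:
        sample = queue.popleft()
        vm_id = hv.create_from_snapshot(snapshot)
        try:
            hv.execute(vm_id, sample)
            log.append((sample, vm_id))
        finally:
            # Clean up even if execution raised, so no container outlives its run.
            hv.destroy(vm_id)
    return log
```

The `try`/`finally` is the part worth noticing: whatever happens during a run, the container is removed, which mirrors the "always start fresh, always throw away" rule.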

The UpScan controller also provides an unfiltered internet connection from the UpScan containers through a Hyper-V virtual switch connected to a commercial privacy VPN, the kind you might use to get around commercial and government content filtering or geo-fences. This provides an anonymous connection from within the UpScan lab environment to the broader internet that is not associated with UpSight. Traffic from the virtual switch is further routed through a virtual firewall that drops all traffic not encapsulated in the VPN. Finally, the physical lab machines connect through a hardware firewall that again only allows outbound traffic encapsulated within the VPN, direct to our ISP from our physical UpScan lab network.
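The "drop everything that is not VPN traffic" rule applied at both firewall layers boils down to a default-deny egress check. Here is a toy model of that decision in Python. Real firewalls are configured with rule sets, not Python, and the `Packet` type, `allow_outbound` function and the endpoint address (taken from a documentation-only IP range) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from ipaddress import ip_address


@dataclass(frozen=True)
class Packet:
    dst_ip: str
    dst_port: int
    protocol: str  # "udp" or "tcp"


# Hypothetical VPN endpoint; 203.0.113.0/24 is reserved for documentation.
VPN_ENDPOINT = ("203.0.113.10", 1194, "udp")


def allow_outbound(packet, vpn_endpoint=VPN_ENDPOINT):
    """Default deny: only traffic addressed to the VPN endpoint may leave."""
    ip, port, proto = vpn_endpoint
    return (
        ip_address(packet.dst_ip) == ip_address(ip)
        and packet.dst_port == port
        and packet.protocol == proto
    )
```

Applying the same check twice, once in the virtual firewall and once in the hardware firewall, means a mistake in one layer does not by itself leak unencapsulated traffic.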

Block diagram of the UpScan lab setup

The net of this is that malware is free to live and operate in as natural a manner as possible, including being allowed to send and receive command and control messages. Much like a well-run zoo, we leave opportunities for ‘enrichment’ in hopes of seeing natural behaviors. We install a bunch of popular applications and leave post-authentication credentials lying about (well, expired ones, but that doesn’t typically stop anybody from trying to steal them), along with some very important documents in various typical locations (largely concerning cats and how cute they are).

Making Observations with UpSight

Now that we’ve built a fairly welcoming and secure environment to run our malware, we need some observation tools. The same UpSight client that we use to protect endpoints from threats actually makes a pretty good observation tool. Normally UpSight first looks at a low-level event and attempts to classify whether it is an ‘Attack Word’, which basically answers the question: does this event represent a tactic in the MITRE ATT&CK(™) framework? UpSight normally only keeps track of events that are classified as Attack Words. However, since the UpScan lab is about learning new things, we use an additional policy that asks the UpSight client to collect event data with a much broader scope. This additional collection policy makes the UpSight client much more similar to a typical Endpoint Detection & Response (EDR) sensor, in that it will collect most events that in some way modify the system even if they are not classified as Attack Words by the UpSight AI model. This extra data gives us the information we need to see whether we should add a new Attack Word to our lexicon.
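The difference between the normal policy and the broader lab policy can be pictured as a simple filter. The sketch below is only a mental model, not how the UpSight client is implemented: the `Policy` names, the `Event` fields and `should_collect` are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Policy(Enum):
    ATTACK_WORDS_ONLY = "attack_words_only"  # normal endpoint behavior
    BROAD = "broad"                          # lab policy, closer to an EDR sensor


@dataclass(frozen=True)
class Event:
    name: str
    is_attack_word: bool   # classified as an Attack Word by the model
    modifies_system: bool  # changes the system in some way


def should_collect(event, policy):
    """Attack Words are always kept; the broad policy also keeps system-modifying events."""
    if event.is_attack_word:
        return True
    return policy is Policy.BROAD and event.modifies_system
```

The events kept only under `Policy.BROAD` are the interesting ones for the lab: they are the candidates for new Attack Words.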

While we want to give malware a healthy environment in which to observe its behavior, what we don’t do is give any UpScan run an infinite amount of time. After all, we don’t want our UpScan lab to become part of some threat actor’s bot army performing DDoS attacks or anything like that, and we’ve got other things to do too! We’ve been experimentally determining how much time is enough and have come up with this heuristic:

If UpSight Predicts, Interdicts and Evicts the sample - we’ve seen enough. It's really exciting to see how often we take this path!

If the sample shows some level of Attack Word (AW) behavior, but not quite enough for us to make solid predictions about it, we give it 15 minutes or so to finish.

If the sample doesn’t run… well, that happens. Often we get samples taken out of context that are missing important dependencies or are just outright buggy. If there is no execution (buggy or missing some dependency) or no activity (it's shy) within the first few minutes, we aren’t likely to see much and we are done. About 40% of the samples we’ve run end up falling into this ‘did not run’ category.
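Put together, the three cases above amount to a small decision function. The sketch below is one way to write it down, under some assumptions: the 15-minute budget comes from the text, but the 5-minute startup window is a guess at "the first few minutes", the function and constant names are hypothetical, and activity that never registers as an Attack Word is lumped in with "did not run" for simplicity.

```python
ACTIVE_BUDGET_MIN = 15   # "15 minutes or so" from the heuristic above
STARTUP_WINDOW_MIN = 5   # assumption: the text only says "the first few minutes"


def decide(evicted, attack_words_seen, elapsed_min):
    """Return whether an UpScan run should continue or stop, and why."""
    if evicted:
        # Predicted, Interdicted and Evicted: we've seen enough.
        return "stop: evicted"
    if attack_words_seen > 0:
        # Some Attack Word behavior, but no solid prediction yet.
        if elapsed_min < ACTIVE_BUDGET_MIN:
            return "continue"
        return "stop: time budget reached"
    # Nothing observed so far: wait out the startup window, then give up.
    if elapsed_min < STARTUP_WINDOW_MIN:
        return "continue"
    return "stop: did not run"
```

For example, `decide(False, 3, 10)` keeps the run going, while `decide(False, 0, 6)` files the sample under ‘did not run’.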

And now that we have some observations, we need to learn from them. I’ll cover UpScan analytics in the next post in this series!